This is a brief summary of the paper Joint Learning of Character and Word Embeddings (Chen et al., IJCAI 2015), which I read and studied, written to organize my notes.

This paper is about word embeddings that make use of a word's internal information, namely its characters.

Existing word embedding models consider only the external context of a word and ignore the word's internal information.

So they propose joint learning of character and word embeddings as follows:

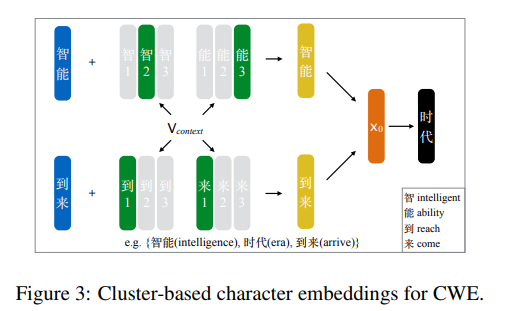

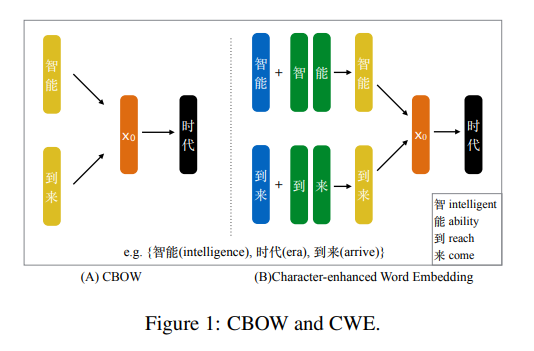

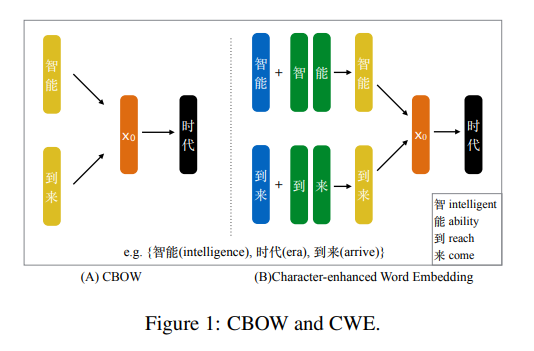

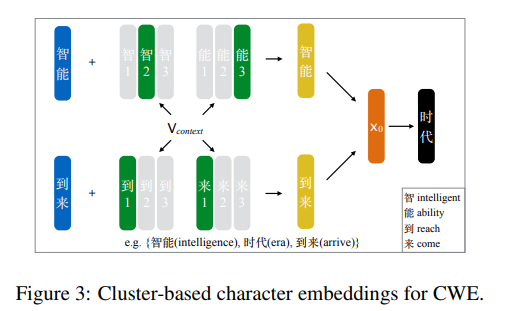
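As a rough sketch of the idea in the figures: as I understand the paper, CWE represents a word in the context by averaging its word embedding with the mean of the embeddings of its characters. The code below only illustrates that composition step under this assumption. The function name `cwe_compose`, the toy 2-dimensional vectors, and the example word are made up for illustration and are not taken from the paper's code.

```python
from typing import Dict, List

Vector = List[float]


def average(vectors: List[Vector]) -> Vector:
    """Element-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def cwe_compose(word: str,
                word_emb: Dict[str, Vector],
                char_emb: Dict[str, Vector]) -> Vector:
    """Combine a word vector with the mean of its character vectors.

    Assumed form (addition variant): x = 0.5 * (w + mean(c_1..c_N)).
    """
    w = word_emb[word]
    c = average([char_emb[ch] for ch in word])
    return [0.5 * (wi + ci) for wi, ci in zip(w, c)]


# Toy embeddings (hypothetical values, chosen so the arithmetic is easy to follow).
word_emb = {"智能": [0.5, 1.0]}
char_emb = {"智": [1.0, 0.0], "能": [0.0, 1.0]}

composed = cwe_compose("智能", word_emb, char_emb)
print(composed)
```

In training, this composed vector would stand in for the plain word vector of each context word, so the character embeddings receive gradients together with the word embeddings.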

The joint model is called the character-enhanced word embedding model (CWE), and they propose several variants of it:

- Position-based character embeddings for CWE

- Cluster-based character embeddings for CWE
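The two variants can be sketched roughly as follows. This is my own minimal reading, not the authors' implementation: in the position-based variant each character keeps separate embeddings depending on whether it is at the beginning, middle, or end of a word, and in the cluster-based variant each character keeps several prototype embeddings and the one most similar to the context is used. The names `position_tags` and `select_prototype`, the tag letters `B`/`M`/`E`, the handling of single-character words, and the use of cosine similarity against an already computed context vector are all assumptions made for this example.

```python
import math
from typing import List, Tuple

Vector = List[float]


def position_tags(word: str) -> List[Tuple[str, str]]:
    """Tag each character with its position: B (begin), M (middle), E (end).

    Assumption: a single-character word is tagged as B.
    """
    last = len(word) - 1
    tags = []
    for i, ch in enumerate(word):
        if i == 0:
            tag = "B"
        elif i == last:
            tag = "E"
        else:
            tag = "M"
        tags.append((ch, tag))
    return tags


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def select_prototype(prototypes: List[Vector], context: Vector) -> int:
    """Return the index of the prototype most similar to the context vector."""
    return max(range(len(prototypes)),
               key=lambda r: cosine(prototypes[r], context))


# Position-based: the (character, tag) pair is the lookup key for the embedding.
print(position_tags("abc"))

# Cluster-based: two hypothetical prototypes for one character.
prototypes = [[1.0, 0.0], [0.0, 1.0]]
context = [0.1, 0.9]
print(select_prototype(prototypes, context))
```

In both cases the selected character vectors would then be averaged and combined with the word vector in the same way as in the basic CWE model.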

Note (Abstract):

Most word embedding methods take a word as a basic unit and learn embeddings according to words' external contexts, ignoring the internal structures of words. However, in some languages such as Chinese, a word is usually composed of several characters and contains rich internal information. The semantic meaning of a word is also related to the meanings of its composing characters. Hence, they take Chinese as an example and present a character-enhanced word embedding model (CWE). To address the issues of character ambiguity and non-compositional words, they propose multiple-prototype character embeddings and an effective word selection method. They evaluate the effectiveness of CWE on word relatedness computation and analogical reasoning. The results show that CWE outperforms baseline methods that ignore internal character information.
